背景

在数据分析中pandas举足轻重,学习pandas最好的方法就是看官方文档,以下是根据官方文档10 Minutes to pandas学习记录。(官方标题10分钟,感觉起码得半个小时吧)

在pandas中主要有两种数据类型,可以简单的理解为:

- Series:一维数组

- DateFrame:二维数组(矩阵)

有了大概的概念之后,开始正式认识pandas:

首先要引入对应的包:

1 | import numpy as np |

新建对象 Object Creation

Series

可以通过传入一个

list对象来新建Series,其中空值为np.nan:1

2

3

4

5

6

7

8

9

10s = pd.Series([1,3,4,np.nan,7,9])

s

Out[5]:

0 1.0

1 3.0

2 4.0

3 NaN

4 7.0

5 9.0

dtype: float64pandas会默认创建一列索引index(上面的0-5)。我们也可以在创建时就指定索引:1

2

3

4

5

6

7

8

9pd.Series([1,3,4,np.nan,7,9], index=[1,1,2,2,'a',4])

Out[9]:

1 1.0

1 3.0

2 4.0

2 NaN

a 7.0

4 9.0

dtype: float64要注意的是,索引是可以重复的,也可以是字符。

DataFrame

新建一个DataFrame对象可以有多种方式:

通过传入一个numpy的数组、指定一个时间的索引以及一个列名。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16dates = pd.date_range('20190101', periods=6)

dates

Out[11]:

DatetimeIndex(['2019-01-01', '2019-01-02', '2019-01-03', '2019-01-04',

'2019-01-05', '2019-01-06'],

dtype='datetime64[ns]', freq='D')

df = pd.DataFrame(np.random.randn(6,4), index=dates, columns=list('ABCD'))

df

Out[18]:

A B C D

2019-01-01 0.671622 0.785726 0.392435 0.874692

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941

2019-01-03 1.364425 -0.947641 2.386880 0.585372

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345

2019-01-06 0.221597 -0.753038 -1.741256 0.287280通过传入一个dict对象

1

2

3

4

5

6

7

8

9

10

11

12

13df2 = pd.DataFrame({'A':1.,

'B':pd.Timestamp('20190101'),

'C':pd.Series(1, index=list(range(4)), dtype='float32'),

'D':np.array([3]*4, dtype='int32'),

'E':pd.Categorical(["test", "tain", "test", "train"]),

'F':'foo'})

df2

Out[27]:

A B C D E F

0 1.0 2019-01-01 1.0 3 test foo

1 1.0 2019-01-01 1.0 3 tain foo

2 1.0 2019-01-01 1.0 3 test foo

3 1.0 2019-01-01 1.0 3 train foo这里我们指定了不同的类型,可以通过如下查看:

1

2

3

4

5

6

7

8

9df2.dtypes

Out[28]:

A float64

B datetime64[ns]

C float32

D int32

E category

F object

dtype: object

可以看出DataFrame和Series一样,在没有指定索引时,会自动生成一个数字的索引,这在后续的操作中十分重要。

查看 Viewing Data

查看开头几行或者末尾几行:

1

2

3

4

5

6

7

8

9

10

11

12

13

14df.head()

Out[30]:

A B C D

2019-01-01 0.671622 0.785726 0.392435 0.874692

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941

2019-01-03 1.364425 -0.947641 2.386880 0.585372

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345

df.tail(3)

Out[31]:

A B C D

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345

2019-01-06 0.221597 -0.753038 -1.741256 0.287280可以通过添加行数参数来输出,默认为输出5行。

查看索引和列名

1

2

3

4

5

6

7df.index

Out[32]:

DatetimeIndex(['2019-01-01', '2019-01-02', '2019-01-03', '2019-01-04',

'2019-01-05', '2019-01-06'],

dtype='datetime64[ns]', freq='D')

df.columns

Out[33]: Index(['A', 'B', 'C', 'D'], dtype='object')使用

DataFrame.to_numpy()转化为numpy数据。需要注意的是由于numpy array类型数据只可包含一种格式,而DataFrame类型数据可包含多种格式,所以在转换过程中,pandas会找到一种可以处理DateFrame中国所有格式的numpy array格式,比如object。这个过程会耗费一定的计算量。1

2

3

4

5

6

7

8

9

10

11

12

13

14

15df.to_numpy()

Out[35]:

array([[ 0.67162219, 0.78572584, 0.39243527, 0.87469243],

[-2.42070338, -1.11620768, -0.34607048, 0.78594081],

[ 1.36442543, -0.94764138, 2.38688005, 0.58537186],

[-0.48597971, -1.28145415, 0.35406263, -1.41885798],

[-1.12271697, -2.78904135, -0.79181242, -0.17434484],

[ 0.22159737, -0.75303807, -1.74125564, 0.28728004]])

df2.to_numpy()

Out[36]:

array([[1.0, Timestamp('2019-01-01 00:00:00'), 1.0, 3, 'test', 'foo'],

[1.0, Timestamp('2019-01-01 00:00:00'), 1.0, 3, 'tain', 'foo'],

[1.0, Timestamp('2019-01-01 00:00:00'), 1.0, 3, 'test', 'foo'],

[1.0, Timestamp('2019-01-01 00:00:00'), 1.0, 3, 'train', 'foo']],

dtype=object)上面df全部为

float类型,所以转换会很快,而df2涉及多种类型转换,最后全部变成了object类型元素。查看数据的简要统计结果

1

2

3

4

5

6

7

8

9

10

11df.describe()

Out[37]:

A B C D

count 6.000000 6.000000 6.000000 6.000000

mean -0.295293 -1.016943 0.042373 0.156680

std 1.356107 1.144047 1.396030 0.860725

min -2.420703 -2.789041 -1.741256 -1.418858

25% -0.963533 -1.240143 -0.680377 -0.058939

50% -0.132191 -1.031925 0.003996 0.436326

75% 0.559116 -0.801689 0.382842 0.735799

max 1.364425 0.785726 2.386880 0.874692转置

1

2

3

4

5

6

7df.T

Out[38]:

2019-01-01 2019-01-02 2019-01-03 2019-01-04 2019-01-05 2019-01-06

A 0.671622 -2.420703 1.364425 -0.485980 -1.122717 0.221597

B 0.785726 -1.116208 -0.947641 -1.281454 -2.789041 -0.753038

C 0.392435 -0.346070 2.386880 0.354063 -0.791812 -1.741256

D 0.874692 0.785941 0.585372 -1.418858 -0.174345 0.287280按坐标轴排序,其中axis参数为坐标轴,axis默认为0,即横轴(对行排序),axis=1则为纵轴(对列排序);asceding参数默认为True,即升序排序,ascending=False则为降序排序:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18df.sort_index(axis=1)

Out[44]:

A B C D

2019-01-01 0.671622 0.785726 0.392435 0.874692

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941

2019-01-03 1.364425 -0.947641 2.386880 0.585372

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345

2019-01-06 0.221597 -0.753038 -1.741256 0.287280

df.sort_index(axis=1, ascending=False)

Out[45]:

D C B A

2019-01-01 0.874692 0.392435 0.785726 0.671622

2019-01-02 0.785941 -0.346070 -1.116208 -2.420703

2019-01-03 0.585372 2.386880 -0.947641 1.364425

2019-01-04 -1.418858 0.354063 -1.281454 -0.485980

2019-01-05 -0.174345 -0.791812 -2.789041 -1.122717

2019-01-06 0.287280 -1.741256 -0.753038 0.221597可见

df.sort_index(axis=1)是按列名升序排序,所以看起来没有变化,当设置ascending=False时,列顺序变成了DCBA。按数值排序:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18df.sort_values(by='B')

Out[46]:

A B C D

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941

2019-01-03 1.364425 -0.947641 2.386880 0.585372

2019-01-06 0.221597 -0.753038 -1.741256 0.287280

2019-01-01 0.671622 0.785726 0.392435 0.874692

df.sort_values(by='B', ascending=False)

Out[47]:

A B C D

2019-01-01 0.671622 0.785726 0.392435 0.874692

2019-01-06 0.221597 -0.753038 -1.741256 0.287280

2019-01-03 1.364425 -0.947641 2.386880 0.585372

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345

筛选 Selection

获取某列

1

2

3

4

5

6

7

8

9

10

11df['A']

Out[49]:

2019-01-01 0.671622

2019-01-02 -2.420703

2019-01-03 1.364425

2019-01-04 -0.485980

2019-01-05 -1.122717

2019-01-06 0.221597

Freq: D, Name: A, dtype: float64

type(df.A)

Out[52]: pandas.core.series.Series也可直接用

df.A,注意这里是大小写敏感的,这时候获取的是一个Series类型数据。选择多行

1

2

3

4

5

6

7

8

9

10

11

12df[0:3]

Out[53]:

A B C D

2019-01-01 0.671622 0.785726 0.392435 0.874692

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941

2019-01-03 1.364425 -0.947641 2.386880 0.585372

df['20190102':'20190104']

Out[54]:

A B C D

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941

2019-01-03 1.364425 -0.947641 2.386880 0.585372

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858通过一个

[]会通过索引对行进行切片,由于前面设置了索引为日期格式,所以可以方便的直接使用日期范围进行筛选。通过标签选择

选择某行

1

2

3

4

5

6

7df.loc[dates[0]]

Out[57]:

A 0.671622

B 0.785726

C 0.392435

D 0.874692

Name: 2019-01-01 00:00:00, dtype: float64选择指定行列的数据

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17df.loc[:, ('A', 'C')]

Out[58]:

A C

2019-01-01 0.671622 0.392435

2019-01-02 -2.420703 -0.346070

2019-01-03 1.364425 2.386880

2019-01-04 -0.485980 0.354063

2019-01-05 -1.122717 -0.791812

2019-01-06 0.221597 -1.741256

df.loc['20190102':'20190105', ('A', 'C')]

Out[62]:

A C

2019-01-02 -2.420703 -0.346070

2019-01-03 1.364425 2.386880

2019-01-04 -0.485980 0.354063

2019-01-05 -1.122717 -0.791812传入第一个参数是行索引标签范围,第二个是列索引标签,

:代表全部。选定某值

1

2

3

4df.loc['20190102', 'A']

Out[69]: -2.420703380445092

df.at[dates[1], 'A']

Out[70]: -2.420703380445092可以通过

loc[]和at[]两种方式来获取某值,但需要注意的是,由于行索引为datetime类型,使用loc[]方式获取时,可直接使用20190102字符串来代替,而在at[]中,必须传入datetime类型,否则会有报错:1

2

3

4

5

6

7df.at['20190102', 'A']

File "pandas/_libs/index.pyx", line 81, in pandas._libs.index.IndexEngine.get_value

File "pandas/_libs/index.pyx", line 89, in pandas._libs.index.IndexEngine.get_value

File "pandas/_libs/index.pyx", line 449, in pandas._libs.index.DatetimeEngine.get_loc

File "pandas/_libs/index.pyx", line 455, in pandas._libs.index.DatetimeEngine._date_check_type

KeyError: '20190102'

通过位置选择

选择某行

1

2

3

4

5

6

7df.iloc[3]

Out[71]:

A -0.485980

B -1.281454

C 0.354063

D -1.418858

Name: 2019-01-04 00:00:00, dtype: float64iloc[]方法的参数,必须是数值。选择指定行列的数据

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21df.iloc[3:5, 0:2]

Out[72]:

A B

2019-01-04 -0.485980 -1.281454

2019-01-05 -1.122717 -2.789041

df.iloc[:,:]

Out[73]:

A B C D

2019-01-01 0.671622 0.785726 0.392435 0.874692

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941

2019-01-03 1.364425 -0.947641 2.386880 0.585372

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345

2019-01-06 0.221597 -0.753038 -1.741256 0.287280

df.iloc[[1, 2, 4], [0, 2]]

Out[74]:

A C

2019-01-02 -2.420703 -0.346070

2019-01-03 1.364425 2.386880

2019-01-05 -1.122717 -0.791812同

loc[],:代表全部。选择某值

1

2

3

4df.iloc[1, 1]

Out[75]: -1.1162076820700824

df.iat[1, 1]

Out[76]: -1.1162076820700824可以通过

iloc[]和iat[]两种方法获取数值。

按条件判断选择

按某列的数值判断选择

1

2

3

4

5

6df[df.A > 0]

Out[77]:

A B C D

2019-01-01 0.671622 0.785726 0.392435 0.874692

2019-01-03 1.364425 -0.947641 2.386880 0.585372

2019-01-06 0.221597 -0.753038 -1.741256 0.287280筛选出符合要求的数据

1

2

3

4

5

6

7

8

9df[df > 0]

Out[78]:

A B C D

2019-01-01 0.671622 0.785726 0.392435 0.874692

2019-01-02 NaN NaN NaN 0.785941

2019-01-03 1.364425 NaN 2.386880 0.585372

2019-01-04 NaN NaN 0.354063 NaN

2019-01-05 NaN NaN NaN NaN

2019-01-06 0.221597 NaN NaN 0.287280不符合要求的数据均会被赋值为空

NaN。使用

isin()方法筛选1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25df2 = df.copy()

df2['E'] = ['one', 'one', 'two', 'three', 'four', 'three']

df2

Out[88]:

A B C D E

2019-01-01 0.671622 0.785726 0.392435 0.874692 one

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941 one

2019-01-03 1.364425 -0.947641 2.386880 0.585372 two

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858 three

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345 four

2019-01-06 0.221597 -0.753038 -1.741256 0.287280 three

df2['E'].isin(['two', 'four'])

Out[89]:

2019-01-01 False

2019-01-02 False

2019-01-03 True

2019-01-04 False

2019-01-05 True

2019-01-06 False

Freq: D, Name: E, dtype: bool

df2[df2['E'].isin(['two', 'four'])]

Out[90]:

A B C D E

2019-01-03 1.364425 -0.947641 2.386880 0.585372 two

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345 four注意:

isin必须严格一致才行,df中的默认数值小数点位数很长,并非显示的5位,为了方便展示,所以新增了E列。直接用原数值,情况如下,可看出[1,1]位置符合要求。1

2

3

4

5

6

7

8

9df.isin([-1.1162076820700824])

Out[95]:

A B C D

2019-01-01 False False False False

2019-01-02 False True False False

2019-01-03 False False False False

2019-01-04 False False False False

2019-01-05 False False False False

2019-01-06 False False False False

设定值

通过指定索引设定列

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20s1 = pd.Series([1, 2, 3, 4, 5, 6], index=pd.date_range('20190102', periods=6))

s1

Out[98]:

2019-01-02 1

2019-01-03 2

2019-01-04 3

2019-01-05 4

2019-01-06 5

2019-01-07 6

Freq: D, dtype: int64

df['F']=s1

df

Out[101]:

A B C D F

2019-01-01 0.671622 0.785726 0.392435 0.874692 NaN

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941 1.0

2019-01-03 1.364425 -0.947641 2.386880 0.585372 2.0

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858 3.0

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345 4.0

2019-01-06 0.221597 -0.753038 -1.741256 0.287280 5.0空值会自动填充为

NaN。通过标签设定值

1

2

3

4

5

6

7

8

9

10df.at[dates[0], 'A'] = 0

df

Out[103]:

A B C D F

2019-01-01 0.000000 0.785726 0.392435 0.874692 NaN

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941 1.0

2019-01-03 1.364425 -0.947641 2.386880 0.585372 2.0

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858 3.0

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345 4.0

2019-01-06 0.221597 -0.753038 -1.741256 0.287280 5.0通过为止设定值

1

2

3

4

5

6

7

8

9

10df.iat[0, 1] = 0

df

Out[105]:

A B C D F

2019-01-01 0.000000 0.000000 0.392435 0.874692 NaN

2019-01-02 -2.420703 -1.116208 -0.346070 0.785941 1.0

2019-01-03 1.364425 -0.947641 2.386880 0.585372 2.0

2019-01-04 -0.485980 -1.281454 0.354063 -1.418858 3.0

2019-01-05 -1.122717 -2.789041 -0.791812 -0.174345 4.0

2019-01-06 0.221597 -0.753038 -1.741256 0.287280 5.0通过

NumPy array设定值1

2

3

4

5

6

7

8

9

10df.loc[:, 'D'] = np.array([5] * len(df))

df

Out[109]:

A B C D F

2019-01-01 0.000000 0.000000 0.392435 5 NaN

2019-01-02 -2.420703 -1.116208 -0.346070 5 1.0

2019-01-03 1.364425 -0.947641 2.386880 5 2.0

2019-01-04 -0.485980 -1.281454 0.354063 5 3.0

2019-01-05 -1.122717 -2.789041 -0.791812 5 4.0

2019-01-06 0.221597 -0.753038 -1.741256 5 5.0通过条件判断设定值

1

2

3

4

5

6

7

8

9

10

11df2 = df.copy()

df2[df2 > 0] = -df2

df2

Out[112]:

A B C D F

2019-01-01 0.000000 0.000000 -0.392435 -5 NaN

2019-01-02 -2.420703 -1.116208 -0.346070 -5 -1.0

2019-01-03 -1.364425 -0.947641 -2.386880 -5 -2.0

2019-01-04 -0.485980 -1.281454 -0.354063 -5 -3.0

2019-01-05 -1.122717 -2.789041 -0.791812 -5 -4.0

2019-01-06 -0.221597 -0.753038 -1.741256 -5 -5.0

空值处理 Missing Data

pandas默认使用np.nan来表示空值,在统计计算中会直接忽略。

通过reindex()方法可以新增、修改、删除某坐标轴(行或列)的索引,并返回一个数据的拷贝:

1 | df1 = df.reindex(index=dates[0:4], columns=list(df.columns) + ['E']) |

删除空值

1

2

3

4df1.dropna(how='any')

Out[116]:

A B C D F E

2019-01-02 -2.420703 -1.116208 -0.34607 5 1.0 1.0填充空值

1

2

3

4

5

6

7df1.fillna(value=5)

Out[117]:

A B C D F E

2019-01-01 0.000000 0.000000 0.392435 5 5.0 1.0

2019-01-02 -2.420703 -1.116208 -0.346070 5 1.0 1.0

2019-01-03 1.364425 -0.947641 2.386880 5 2.0 5.0

2019-01-04 -0.485980 -1.281454 0.354063 5 3.0 5.0判断是否为空值

1

2

3

4

5

6

7pd.isna(df1)

Out[118]:

A B C D F E

2019-01-01 False False False False True False

2019-01-02 False False False False False False

2019-01-03 False False False False False True

2019-01-04 False False False False False True

运算 Operations

统计

注意 所有的统计默认是不包含空值的

平均值

默认情况是按列求平均值:

1

2

3

4

5

6

7

8df.mean()

Out[119]:

A -0.407230

B -1.147897

C 0.042373

D 5.000000

F 3.000000

dtype: float64如果需要按行求平均值,需指定轴参数:

1

2

3

4

5

6

7

8

9df.mean(1)

Out[120]:

2019-01-01 1.348109

2019-01-02 0.423404

2019-01-03 1.960733

2019-01-04 1.317326

2019-01-05 0.859286

2019-01-06 1.545461

Freq: D, dtype: float64数值移动

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20s = pd.Series([1, 3, 5, np.nan, 6, 8], index=dates)

s

Out[122]:

2019-01-01 1.0

2019-01-02 3.0

2019-01-03 5.0

2019-01-04 NaN

2019-01-05 6.0

2019-01-06 8.0

Freq: D, dtype: float64

s = s.shift(2)

s

Out[125]:

2019-01-01 NaN

2019-01-02 NaN

2019-01-03 1.0

2019-01-04 3.0

2019-01-05 5.0

2019-01-06 NaN

Freq: D, dtype: float64这里将

s的值移动两个,那么空出的部分会自动使用NaN填充。不同维度间的运算,

pandas会自动扩展维度:1

2

3

4

5

6

7

8

9df.sub(s, axis='index')

Out[128]:

A B C D F

2019-01-01 NaN NaN NaN NaN NaN

2019-01-02 NaN NaN NaN NaN NaN

2019-01-03 0.364425 -1.947641 1.386880 4.0 1.0

2019-01-04 -3.485980 -4.281454 -2.645937 2.0 0.0

2019-01-05 -6.122717 -7.789041 -5.791812 0.0 -1.0

2019-01-06 NaN NaN NaN NaN NaN

应用

通过

apply()方法,可以对数据进行逐一操作:累计求和

1

2

3

4

5

6

7

8

9df.apply(np.cumsum)

Out[130]:

A B C D F

2019-01-01 0.000000 0.000000 0.392435 5 NaN

2019-01-02 -2.420703 -1.116208 0.046365 10 1.0

2019-01-03 -1.056278 -2.063849 2.433245 15 3.0

2019-01-04 -1.542258 -3.345303 2.787307 20 6.0

2019-01-05 -2.664975 -6.134345 1.995495 25 10.0

2019-01-06 -2.443377 -6.887383 0.254239 30 15.0这里使用了

apply()方法调用np.cumsum方法,也可直接使用df.cumsum():1

2

3

4

5

6

7

8

9df.cumsum()

Out[133]:

A B C D F

2019-01-01 0.000000 0.000000 0.392435 5.0 NaN

2019-01-02 -2.420703 -1.116208 0.046365 10.0 1.0

2019-01-03 -1.056278 -2.063849 2.433245 15.0 3.0

2019-01-04 -1.542258 -3.345303 2.787307 20.0 6.0

2019-01-05 -2.664975 -6.134345 1.995495 25.0 10.0

2019-01-06 -2.443377 -6.887383 0.254239 30.0 15.0自定义方法

通过自定义函数,配合

apply()方法,可以实现更多数据处理:1

2

3

4

5

6

7

8df.apply(lambda x: x.max() - x.min())

Out[134]:

A 3.785129

B 2.789041

C 4.128136

D 0.000000

F 4.000000

dtype: float64

矩阵

统计矩阵中每个元素出现的频次:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23s = pd.Series(np.random.randint(0, 7, size=10))

s

Out[136]:

0 2

1 0

2 4

3 0

4 3

5 3

6 6

7 4

8 6

9 5

dtype: int64

s.value_counts()

Out[137]:

6 2

4 2

3 2

0 2

5 1

2 1

dtype: int64String方法

所有的Series类型都可以直接调用

str的属性方法来对每个对象进行操作。比如转换成大写:

1

2

3

4

5

6

7

8

9

10

11

12

13s = pd.Series(['A', 'B', 'C', 'Aaba', 'Baca', np.nan, 'CABA', 'dog', 'cat'])

s.str.upper()

Out[139]:

0 A

1 B

2 C

3 AABA

4 BACA

5 NaN

6 CABA

7 DOG

8 CAT

dtype: object分列:

1

2

3

4

5

6

7

8

9

10

11s = pd.Series(['A,b', 'c,d'])

s

Out[142]:

0 A,b

1 c,d

dtype: object

s.str.split(',', expand=True)

Out[143]:

0 1

0 A b

1 c d其他方法:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47dir(str)

Out[140]:

['capitalize',

'casefold',

'center',

'count',

'encode',

'endswith',

'expandtabs',

'find',

'format',

'format_map',

'index',

'isalnum',

'isalpha',

'isascii',

'isdecimal',

'isdigit',

'isidentifier',

'islower',

'isnumeric',

'isprintable',

'isspace',

'istitle',

'isupper',

'join',

'ljust',

'lower',

'lstrip',

'maketrans',

'partition',

'replace',

'rfind',

'rindex',

'rjust',

'rpartition',

'rsplit',

'rstrip',

'split',

'splitlines',

'startswith',

'strip',

'swapcase',

'title',

'translate',

'upper',

'zfill']

合并 Merge

pandas`可以提供很多方法可以快速的合并各种类型的Series、DataFrame以及Panel Object。

Concat方法

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44df = pd.DataFrame(np.random.randn(10, 4))

df

Out[145]:

0 1 2 3

0 -0.227408 -0.185674 -0.187919 0.185685

1 1.132517 -0.539992 1.156631 -0.022468

2 0.214134 -1.283055 -0.862972 0.518942

3 0.785903 1.033915 -0.471496 -1.403762

4 -0.676717 -0.529971 -1.161988 -1.265071

5 0.670126 1.320960 -0.128098 0.718631

6 0.589902 0.349386 0.221955 1.749188

7 -0.328885 0.607929 -0.973610 -0.928472

8 1.724243 -0.661503 -0.374254 0.409250

9 1.346625 0.618285 0.528776 -0.628470

# break it into pieces

pieces = [df[:3], df[3:7], df[7:]]

pieces

Out[147]:

[ 0 1 2 3

0 -0.227408 -0.185674 -0.187919 0.185685

1 1.132517 -0.539992 1.156631 -0.022468

2 0.214134 -1.283055 -0.862972 0.518942,

0 1 2 3

3 0.785903 1.033915 -0.471496 -1.403762

4 -0.676717 -0.529971 -1.161988 -1.265071

5 0.670126 1.320960 -0.128098 0.718631

6 0.589902 0.349386 0.221955 1.749188,

0 1 2 3

7 -0.328885 0.607929 -0.973610 -0.928472

8 1.724243 -0.661503 -0.374254 0.409250

9 1.346625 0.618285 0.528776 -0.628470]

pd.concat(pieces)

Out[148]:

0 1 2 3

0 -0.227408 -0.185674 -0.187919 0.185685

1 1.132517 -0.539992 1.156631 -0.022468

2 0.214134 -1.283055 -0.862972 0.518942

3 0.785903 1.033915 -0.471496 -1.403762

4 -0.676717 -0.529971 -1.161988 -1.265071

5 0.670126 1.320960 -0.128098 0.718631

6 0.589902 0.349386 0.221955 1.749188

7 -0.328885 0.607929 -0.973610 -0.928472

8 1.724243 -0.661503 -0.374254 0.409250

9 1.346625 0.618285 0.528776 -0.628470Merge方法

这是类似

sql的合并方法:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19left = pd.DataFrame({'key': ['foo', 'foo'], 'lval': [1, 2]})

right = pd.DataFrame({'key': ['foo', 'foo'], 'rval': [4, 5]})

left

Out[151]:

key lval

0 foo 1

1 foo 2

right

Out[152]:

key rval

0 foo 4

1 foo 5

pd.merge(left, right, on='key')

Out[153]:

key lval rval

0 foo 1 4

1 foo 1 5

2 foo 2 4

3 foo 2 5另一个例子:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17left = pd.DataFrame({'key': ['foo', 'bar'], 'lval': [1, 2]})

right = pd.DataFrame({'key': ['foo', 'bar'], 'rval': [4, 5]})

left

Out[156]:

key lval

0 foo 1

1 bar 2

right

Out[157]:

key rval

0 foo 4

1 bar 5

pd.merge(left, right, on='key')

Out[158]:

key lval rval

0 foo 1 4

1 bar 2 5Append方法

在DataFrame中增加行

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32df = pd.DataFrame(np.random.randn(8, 4), columns=['A', 'B', 'C', 'D'])

df

Out[160]:

A B C D

0 -0.496709 0.573449 0.076059 0.685285

1 0.479253 0.587376 -1.240070 -0.907910

2 -0.052609 -0.287786 -1.949402 1.163323

3 -0.659489 0.525583 0.820922 -1.368544

4 1.270453 -1.813249 0.059915 0.586703

5 1.859657 0.564274 -0.198763 -1.794173

6 -0.649153 -3.129258 0.063418 -0.727936

7 0.862402 -0.800031 -1.954784 -0.028607

s = df.iloc[3]

s

Out[162]:

A -0.659489

B 0.525583

C 0.820922

D -1.368544

Name: 3, dtype: float64

df.append(s, ignore_index=True)

Out[163]:

A B C D

0 -0.496709 0.573449 0.076059 0.685285

1 0.479253 0.587376 -1.240070 -0.907910

2 -0.052609 -0.287786 -1.949402 1.163323

3 -0.659489 0.525583 0.820922 -1.368544

4 1.270453 -1.813249 0.059915 0.586703

5 1.859657 0.564274 -0.198763 -1.794173

6 -0.649153 -3.129258 0.063418 -0.727936

7 0.862402 -0.800031 -1.954784 -0.028607

8 -0.659489 0.525583 0.820922 -1.368544这里要注意,我们增加了

ignore_index=True参数,如果不设置的话,那么增加的新行的index仍然是3,这样在后续的处理中可能有存在问题。具体也需要看情况来处理。1

2

3

4

5

6

7

8

9

10

11

12df.append(s)

Out[164]:

A B C D

0 -0.496709 0.573449 0.076059 0.685285

1 0.479253 0.587376 -1.240070 -0.907910

2 -0.052609 -0.287786 -1.949402 1.163323

3 -0.659489 0.525583 0.820922 -1.368544

4 1.270453 -1.813249 0.059915 0.586703

5 1.859657 0.564274 -0.198763 -1.794173

6 -0.649153 -3.129258 0.063418 -0.727936

7 0.862402 -0.800031 -1.954784 -0.028607

3 -0.659489 0.525583 0.820922 -1.368544

分组 Grouping

一般分组统计有三个步骤:

- 分组:选择需要的数据

- 计算:对每个分组进行计算

- 合并:把分组计算的结果合并为一个数据结构中

1 | df = pd.DataFrame({'A': ['foo', 'bar', 'foo', 'bar', |

按A列分组并使用sum函数进行计算:

1 | df.groupby('A').sum() |

这里由于B列无法应用sum函数,所以直接被忽略了。

按A、B列分组并使用sum函数进行计算:

1 | df.groupby(['A', 'B']).sum() |

这样就有了一个多层index的结果集。

整形 Reshaping

堆叠 Stack

python的zip函数可以将对象中对应的元素打包成一个个的元组:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45tuples = list(zip(['bar', 'bar', 'baz', 'baz',

'foo', 'foo', 'qux', 'qux'],

['one', 'two', 'one', 'two',

'one', 'two', 'one', 'two']))

tuples

Out[172]:

[('bar', 'one'),

('bar', 'two'),

('baz', 'one'),

('baz', 'two'),

('foo', 'one'),

('foo', 'two'),

('qux', 'one'),

('qux', 'two')]

## 设置两级索引

index = pd.MultiIndex.from_tuples(tuples, names=['first', 'second'])

index

Out[174]:

MultiIndex(levels=[['bar', 'baz', 'foo', 'qux'], ['one', 'two']],

codes=[[0, 0, 1, 1, 2, 2, 3, 3], [0, 1, 0, 1, 0, 1, 0, 1]],

names=['first', 'second'])

## 创建DataFrame

df = pd.DataFrame(np.random.randn(8, 2), index=index, columns=['A', 'B'])

df

Out[176]:

A B

first second

bar one -0.501215 -0.947993

two -0.828914 0.232167

baz one 1.245419 1.006092

two 1.016656 -0.441073

foo one 0.479037 -0.500034

two -1.113097 0.591696

qux one -0.014760 -0.320735

two -0.648743 1.499899

## 选取DataFrame

df2 = df[:4]

df2

Out[179]:

A B

first second

bar one -0.501215 -0.947993

two -0.828914 0.232167

baz one 1.245419 1.006092

two 1.016656 -0.441073使用

stack()方法,可以通过堆叠的方式将二维数据变成为一维数据:1

2

3

4

5

6

7

8

9

10

11

12

13stacked = df2.stack()

stacked

Out[181]:

first second

bar one A -0.501215

B -0.947993

two A -0.828914

B 0.232167

baz one A 1.245419

B 1.006092

two A 1.016656

B -0.441073

dtype: float64对应的逆操作为

unstacked()方法:1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24stacked.unstack()

Out[182]:

A B

first second

bar one -0.501215 -0.947993

two -0.828914 0.232167

baz one 1.245419 1.006092

two 1.016656 -0.441073

stacked.unstack(1)

Out[183]:

second one two

first

bar A -0.501215 -0.828914

B -0.947993 0.232167

baz A 1.245419 1.016656

B 1.006092 -0.441073

stacked.unstack(0)

Out[184]:

first bar baz

second

one A -0.501215 1.245419

B -0.947993 1.006092

two A -0.828914 1.016656

B 0.232167 -0.441073unstack()默认对最后一层级进行操作,也可通过输入参数指定。表格转置

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20df = pd.DataFrame({'A': ['one', 'one', 'two', 'three'] * 3,

'B': ['A', 'B', 'C'] * 4,

'C': ['foo', 'foo', 'foo', 'bar', 'bar', 'bar'] * 2,

'D': np.random.randn(12),

'E': np.random.randn(12)})

df

Out[190]:

A B C D E

0 one A foo -0.933264 -2.387490

1 one B foo -0.288101 0.023214

2 two C foo 0.594490 0.418505

3 three A bar 0.450683 1.939623

4 one B bar 0.243897 -0.965783

5 one C bar -0.705494 -0.078283

6 two A foo 1.560352 0.419907

7 three B foo 0.199453 0.998711

8 one C foo 1.426861 -1.108297

9 one A bar -0.570951 -0.022560

10 two B bar -0.350937 -1.767804

11 three C bar 0.983465 0.065792通过

pivot_table()方法可以很方便的进行行列的转换:1

2

3

4

5

6

7

8

9

10

11

12

13pd.pivot_table(df, values='D', index=['A', 'B'], columns=['C'])

Out[191]:

C bar foo

A B

one A -0.570951 -0.933264

B 0.243897 -0.288101

C -0.705494 1.426861

three A 0.450683 NaN

B NaN 0.199453

C 0.983465 NaN

two A NaN 1.560352

B -0.350937 NaN

C NaN 0.594490转换中,涉及到空值部分会自动填充为

NaN。

时间序列 Time Series

pandas的在时序转换方面十分强大,可以很方便的进行各种转换。

时间间隔调整

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48rng = pd.date_range('1/1/2019', periods=100, freq='S')

rng[:5]

Out[214]:

DatetimeIndex(['2019-01-01 00:00:00', '2019-01-01 00:00:01',

'2019-01-01 00:00:02', '2019-01-01 00:00:03',

'2019-01-01 00:00:04'],

dtype='datetime64[ns]', freq='S')

ts = pd.Series(np.random.randint(0, 500, len(rng)), index=rng)

ts.head(5)

Out[216]:

2019-01-01 00:00:00 245

2019-01-01 00:00:01 347

2019-01-01 00:00:02 113

2019-01-01 00:00:03 196

2019-01-01 00:00:04 131

Freq: S, dtype: int64

## 按10s间隔进行重新采样

ts1 = ts.resample('10S')

ts1

Out[209]: DatetimeIndexResampler [freq=<10 * Seconds>, axis=0, closed=left, label=left, convention=start, base=0]

## 用求平均的方式进行数据整合

ts1.mean()

Out[218]:

2019-01-01 00:00:00 174.0

2019-01-01 00:00:10 278.5

2019-01-01 00:00:20 281.8

2019-01-01 00:00:30 337.2

2019-01-01 00:00:40 221.0

2019-01-01 00:00:50 277.1

2019-01-01 00:01:00 171.0

2019-01-01 00:01:10 321.0

2019-01-01 00:01:20 318.6

2019-01-01 00:01:30 302.6

Freq: 10S, dtype: float64

## 用求和的方式进行数据整合

ts1.sum()

Out[219]:

2019-01-01 00:00:00 1740

2019-01-01 00:00:10 2785

2019-01-01 00:00:20 2818

2019-01-01 00:00:30 3372

2019-01-01 00:00:40 2210

2019-01-01 00:00:50 2771

2019-01-01 00:01:00 1710

2019-01-01 00:01:10 3210

2019-01-01 00:01:20 3186

2019-01-01 00:01:30 3026

Freq: 10S, dtype: int64这里先通过

resample进行重采样,在指定sum()或者mean()等方式来指定冲采样的处理方式。显示时区:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24rng = pd.date_range('1/1/2019 00:00', periods=5, freq='D')

rng

Out[221]:

DatetimeIndex(['2019-01-01', '2019-01-02', '2019-01-03', '2019-01-04',

'2019-01-05'],

dtype='datetime64[ns]', freq='D')

ts = pd.Series(np.random.randn(len(rng)), rng)

ts

Out[223]:

2019-01-01 -2.327686

2019-01-02 1.527872

2019-01-03 0.063982

2019-01-04 -0.213572

2019-01-05 -0.014856

Freq: D, dtype: float64

ts_utc = ts.tz_localize('UTC')

ts_utc

Out[225]:

2019-01-01 00:00:00+00:00 -2.327686

2019-01-02 00:00:00+00:00 1.527872

2019-01-03 00:00:00+00:00 0.063982

2019-01-04 00:00:00+00:00 -0.213572

2019-01-05 00:00:00+00:00 -0.014856

Freq: D, dtype: float64转换时区:

1

2

3

4

5

6

7

8ts_utc.tz_convert('US/Eastern')

Out[226]:

2018-12-31 19:00:00-05:00 -2.327686

2019-01-01 19:00:00-05:00 1.527872

2019-01-02 19:00:00-05:00 0.063982

2019-01-03 19:00:00-05:00 -0.213572

2019-01-04 19:00:00-05:00 -0.014856

Freq: D, dtype: float64时间格式转换

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27rng = pd.date_range('1/1/2019', periods=5, freq='M')

ts = pd.Series(np.random.randn(len(rng)), index=rng)

ts

Out[230]:

2019-01-31 0.197134

2019-02-28 0.569082

2019-03-31 -0.322141

2019-04-30 0.005778

2019-05-31 -0.082306

Freq: M, dtype: float64

ps = ts.to_period()

ps

Out[232]:

2019-01 0.197134

2019-02 0.569082

2019-03 -0.322141

2019-04 0.005778

2019-05 -0.082306

Freq: M, dtype: float64

ps.to_timestamp()

Out[233]:

2019-01-01 0.197134

2019-02-01 0.569082

2019-03-01 -0.322141

2019-04-01 0.005778

2019-05-01 -0.082306

Freq: MS, dtype: float64在是时间段和时间转换过程中,有一些很方便的算术方法可以使用,比如我们转换如下两个频率:

1、按季度划分,且每个年的最后一个月是11月。

2、按季度划分,每个月开始为频率一中下一个月的早上9点。

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30prng = pd.period_range('2018Q1', '2019Q4', freq='Q-NOV')

prng

Out[243]:

PeriodIndex(['2018Q1', '2018Q2', '2018Q3', '2018Q4', '2019Q1', '2019Q2',

'2019Q3', '2019Q4'],

dtype='period[Q-NOV]', freq='Q-NOV')

ts = pd.Series(np.random.randn(len(prng)), prng)

ts

Out[245]:

2018Q1 -0.112692

2018Q2 -0.507304

2018Q3 -0.324846

2018Q4 0.549671

2019Q1 -0.897732

2019Q2 1.130070

2019Q3 -0.399814

2019Q4 0.830488

Freq: Q-NOV, dtype: float64

ts.index = (prng.asfreq('M', 'e') + 1).asfreq('H', 's') + 9

ts

Out[247]:

2018-03-01 09:00 -0.112692

2018-06-01 09:00 -0.507304

2018-09-01 09:00 -0.324846

2018-12-01 09:00 0.549671

2019-03-01 09:00 -0.897732

2019-06-01 09:00 1.130070

2019-09-01 09:00 -0.399814

2019-12-01 09:00 0.830488

Freq: H, dtype: float64注意:这个例子有点怪。可以这样理解,我们先将

prng直接转换为按小时显示:1

2

3

4

5

6prng.asfreq('H', 'end')

Out[253]:

PeriodIndex(['2018-02-28 23:00', '2018-05-31 23:00', '2018-08-31 23:00',

'2018-11-30 23:00', '2019-02-28 23:00', '2019-05-31 23:00',

'2019-08-31 23:00', '2019-11-30 23:00'],

dtype='period[H]', freq='H')我们要把时间转换为下一个月的早上9点,所以先转换为按月显示,并每个月加1(即下个月),然后按小时显示并加9(早上9点)。

另外例子中

s参数是start的简写,e参数是end的简写,Q-NOV即表示按季度,且每年的NOV是最后一个月。更多了

freq简称可以参考:http://pandas.pydata.org/pandas-docs/stable/user_guide/timeseries.html#anchored-offsetsasfreq()方法介绍可参考:http://pandas.pydata.org/pandas-docs/stable/reference/api/pandas.DataFrame.asfreq.html#pandas-dataframe-asfreq

分类目录类型 Categoricals

关于Categories类型介绍可以参考:http://pandas.pydata.org/pandas-docs/stable/user_guide/categorical.html#categorical

类型转换:

astype('category')1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22df = pd.DataFrame({"id": [1, 2, 3, 4, 5, 6],

"raw_grade": ['a', 'b', 'b', 'a', 'a', 'e']})

df

Out[255]:

id raw_grade

0 1 a

1 2 b

2 3 b

3 4 a

4 5 a

5 6 e

df['grade'] = df['raw_grade'].astype('category')

df['grade']

Out[257]:

0 a

1 b

2 b

3 a

4 a

5 e

Name: grade, dtype: category

Categories (3, object): [a, b, e]重命名分类:

cat1

2

3

4

5

6

7

8

9

10

11df["grade"].cat.categories = ["very good", "good", "very bad"]

df['grade']

Out[269]:

0 very good

1 good

2 good

3 very good

4 very good

5 very bad

Name: grade, dtype: category

Categories (3, object): [very good, good, very bad]重分类:

1

2

3

4

5

6

7

8

9

10

11df['grade'] = df['grade'].cat.set_categories(["very bad", "bad", "medium","good", "very good"])

df['grade']

Out[271]:

0 very good

1 good

2 good

3 very good

4 very good

5 very bad

Name: grade, dtype: category

Categories (5, object): [very bad, bad, medium, good, very good]排列

1

2

3

4

5

6

7

8

9df.sort_values(by="grade")

Out[272]:

id raw_grade grade

5 6 e very bad

1 2 b good

2 3 b good

0 1 a very good

3 4 a very good

4 5 a very good分组

1

2

3

4

5

6

7

8

9df.groupby("grade").size()

Out[273]:

grade

very bad 1

bad 0

medium 0

good 2

very good 3

dtype: int64

画图 Plotting

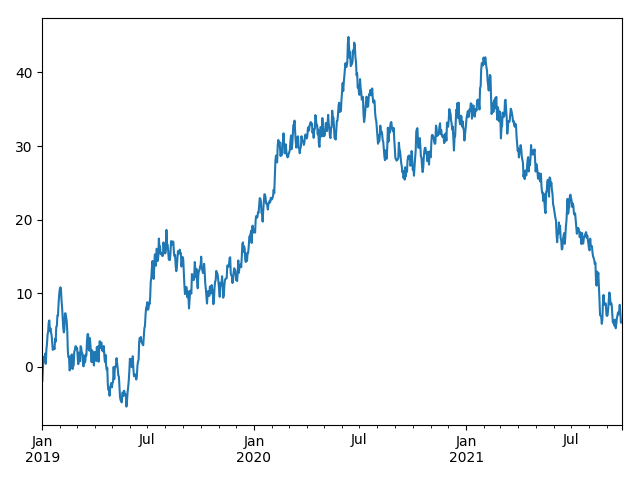

Series

1

2

3

4

5

6

7

8

9ts = pd.Series(np.random.randn(1000),

index=pd.date_range('1/1/2000', periods=1000))

ts = pd.Series(np.random.randn(1000),

index=pd.date_range('1/1/2019', periods=1000))

ts = ts.cumsum()

ts.plot()

Out[277]: <matplotlib.axes._subplots.AxesSubplot at 0x1135bcc50>

import matplotlib.pyplot as plt

plt.show()

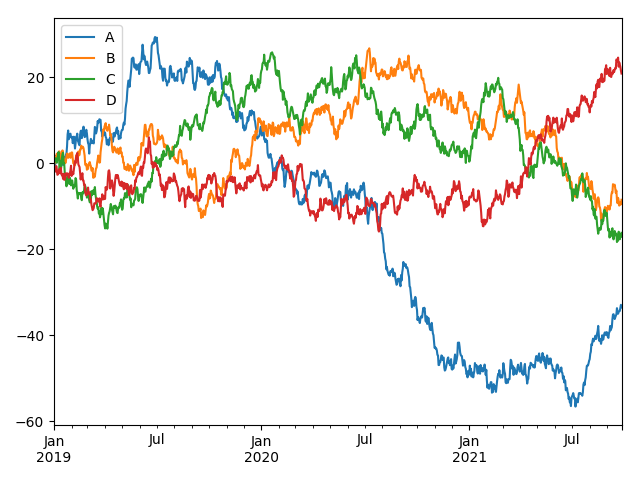

DataFrame画图

使用

plot可以把所有的列都通过标签的形式展示出来:1

2

3

4

5

6

7

8df = pd.DataFrame(np.random.randn(1000, 4), index=ts.index,

columns=['A', 'B', 'C', 'D'])

df = df.cumsum()

plt.figure()

Out[282]: <Figure size 640x480 with 0 Axes>

df.plot()

Out[283]: <matplotlib.axes._subplots.AxesSubplot at 0x11587e4e0>

plt.legend(loc='best')

导入导出数据 Getting Data In/Out

CSV

写入:

1

df.to_csv('foo.csv')

读取:

1

pd.read_csv('foo.csv')

HDF5

写入:

1

df.to_hdf('foo.h5', 'df')

读取:

1

pd.read_hdf('foo.h5', 'df')

Excel

写入:

1

df.to_excel('foo.xlsx', sheet_name='Sheet1')

读取:

1

pd.read_excel('foo.xlsx', 'Sheet1', index_col=None, na_values=['NA'])

异常处理 Gotchas

如果有一些异常情况比如:

1 | if pd.Series([False, True, False]): |

可以参考如下链接:

http://pandas.pydata.org/pandas-docs/stable/getting_started/basics.html#basics-compare

http://pandas.pydata.org/pandas-docs/stable/user_guide/gotchas.html#gotchas