背景

Flink是目前比较流行的流处理框架,可通过集群调用,也可通过api调用。可通过在本地搭建单flink集群进行开发测试。

基础环境搭建

- 系统:Mac OS

- java环境:安装java1.8及以上

flink本地集群搭建运行

安装flink

通过brew安装flink

1

brew install apache-flink

确认flink安装情况

1

2flink --version

Version: 1.8.0, Commit ID: 4caec0d确认安装路径

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16brew info apache-flink

apache-flink: stable 1.8.0, HEAD

Scalable batch and stream data processing

https://flink.apache.org/

/usr/local/Cellar/apache-flink/1.8.0 (155 files, 320.2MB) *

Built from source on 2019-09-25 at 09:47:27

From: https://mirrors.tuna.tsinghua.edu.cn/git/homebrew/homebrew-core.git/Formula/apache-flink.rb

==> Requirements

Required: java = 1.8 ✔

==> Options

--HEAD

Install HEAD version

==> Analytics

install: 1,780 (30 days), 5,638 (90 days), 17,424 (365 days)

install_on_request: 1,776 (30 days), 5,604 (90 days), 17,243 (365 days)

build_error: 0 (30 days)可见flink的安装目录为:/usr/local/Cellar/apache-flink/1.8.0

flink相关的启停脚本、日志都在这个目录下。

启动flink

进入flink安装目录

1

cd /usr/local/Cellar/apache-flink/1.8.0

运行启动脚本

1

2

3

4./libexec/bin/start-cluster.sh

Starting cluster.

Starting standalonesession daemon on host zhengkaideMacBook-Pro.local.

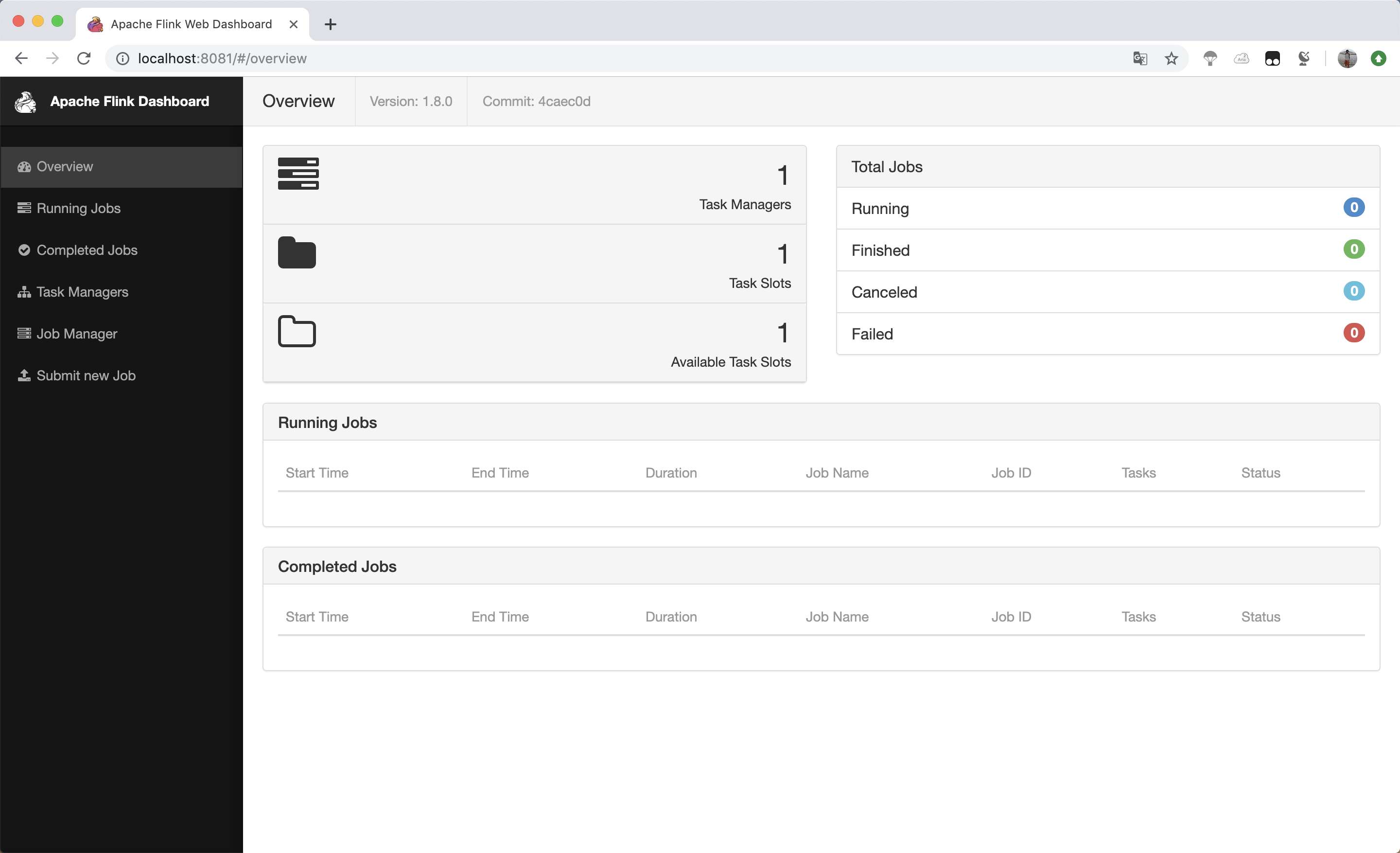

Starting taskexecutor daemon on host zhengkaideMacBook-Pro.local.打开网页查看:登陆http://localhost:8081即可

查看flink相关日志,在flink安装路径下的log目录内:

1

2

3

4

5

6

7

8

9

10

11$ tail libexec/log/flink-zhengk-standalonesession-0-zhengkaideMacBook-Pro.local.log

2019-09-26 10:53:59,030 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint - Rest endpoint listening at localhost:8081

2019-09-26 10:53:59,031 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint - http://localhost:8081 was granted leadership with leaderSessionID=00000000-0000-0000-0000-000000000000

2019-09-26 10:53:59,031 INFO org.apache.flink.runtime.dispatcher.DispatcherRestEndpoint - Web frontend listening at http://localhost:8081.

2019-09-26 10:53:59,118 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcService - Starting RPC endpoint for org.apache.flink.runtime.resourcemanager.StandaloneResourceManager at akka://flink/user/resourcemanager .

2019-09-26 10:53:59,137 INFO org.apache.flink.runtime.rpc.akka.AkkaRpcService - Starting RPC endpoint for org.apache.flink.runtime.dispatcher.StandaloneDispatcher at akka://flink/user/dispatcher .

2019-09-26 10:53:59,158 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager - ResourceManager akka.tcp://flink@localhost:6123/user/resourcemanager was granted leadership with fencing token 00000000000000000000000000000000

2019-09-26 10:53:59,159 INFO org.apache.flink.runtime.resourcemanager.slotmanager.SlotManager - Starting the SlotManager.

2019-09-26 10:53:59,170 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher - Dispatcher akka.tcp://flink@localhost:6123/user/dispatcher was granted leadership with fencing token 00000000-0000-0000-0000-000000000000

2019-09-26 10:53:59,172 INFO org.apache.flink.runtime.dispatcher.StandaloneDispatcher - Recovering all persisted jobs.

2019-09-26 10:53:59,496 INFO org.apache.flink.runtime.resourcemanager.StandaloneResourceManager - Registering TaskManager with ResourceID deb7db492d9f5d89bb6070517aeb5aa7 (akka.tcp://flink@192.168.1.104:61286/user/taskmanager_0) at ResourceManager

编写flink demo程序

如下是一个监听端口的流数据,并进行单词字数统计的demo,代码如下,需最终打包成一个可执行的jar包。

1 | package org.apache.flink; |

启动demo程序

单独开启一个终端,使用nc启动监听9000端口(这里端口可以更换)

1

$ nc -l 9000

通过flink启动demo程序,并指定端口为9000

1

2

3cd /usr/local/Cellar/apache-flink/1.8.0

./libexec/bin/flink run /Users/zhengk/IdeaProjects/flinkquickstartjava/target/flink-quickstart-java-1.0-SNAPSHOT.jar --port 9000

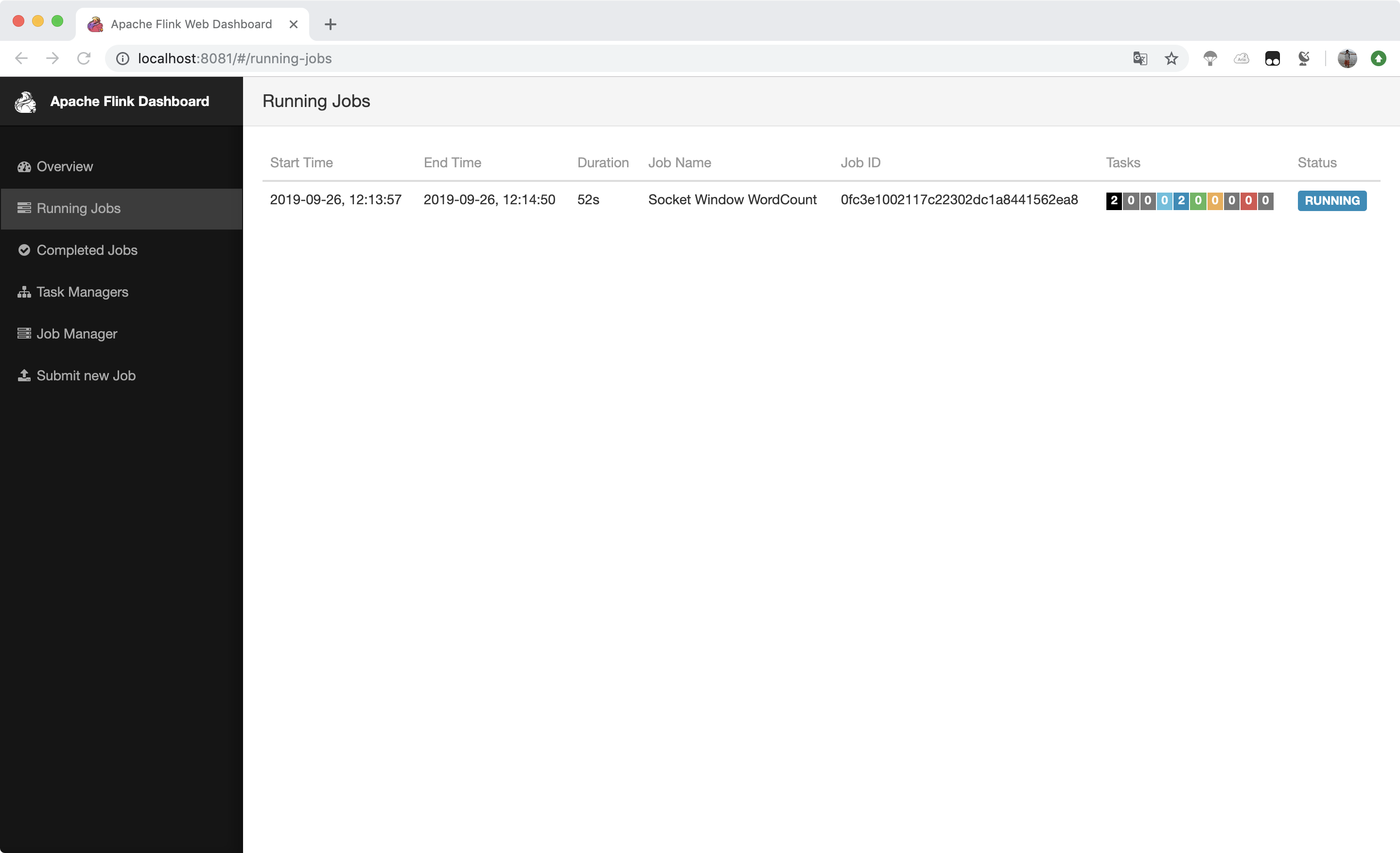

Starting execution of program通过网页查看运行情况:http://localhost:8081

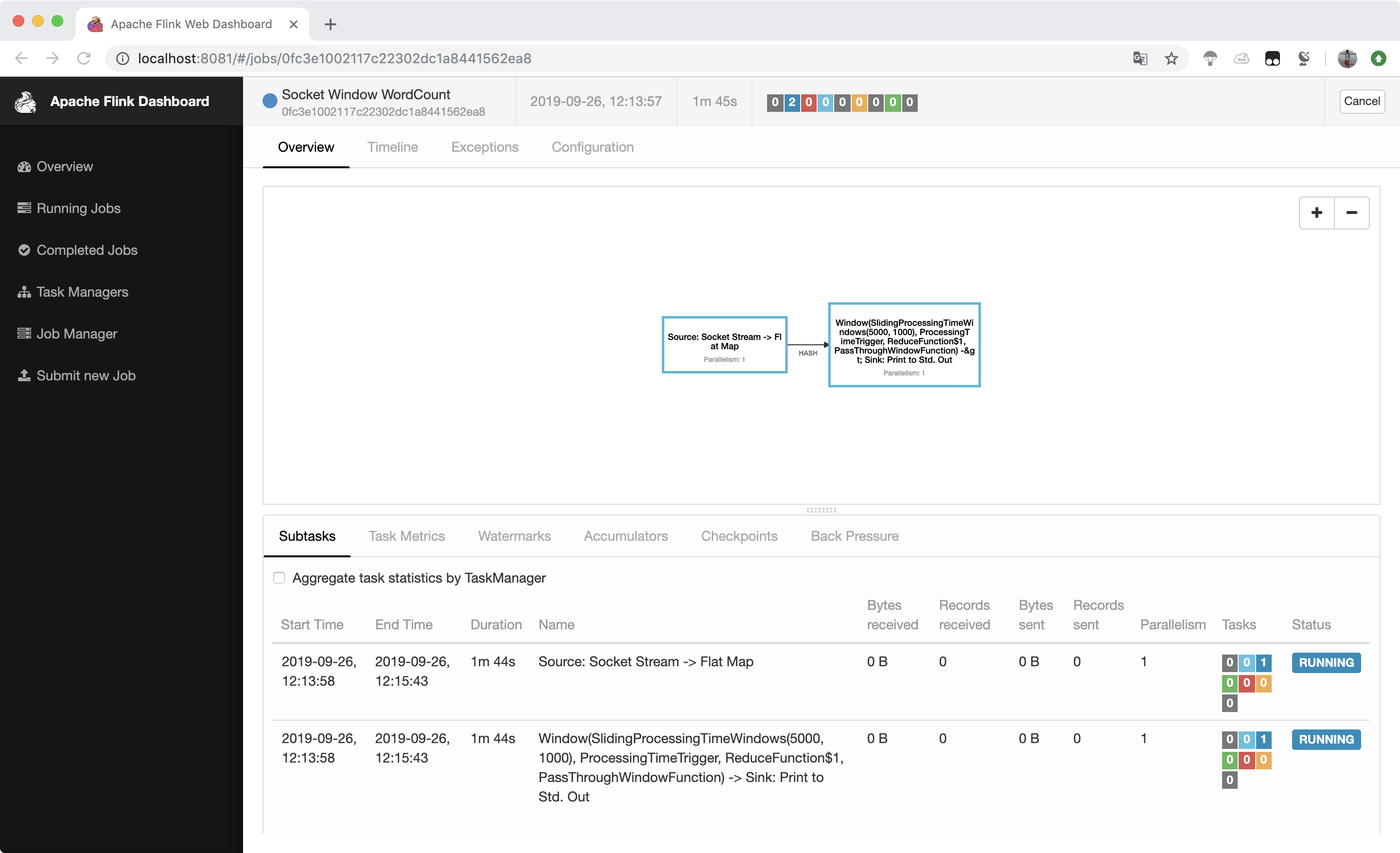

点击进去可查看详细信息:

在nc终端中输入一些文字(这里为随意输入,会通过流的方式)

1

2

3

4lorem ipsum

ipsum ipsum ipsum

bye

hello查看flink日志可观察字数统计的输出:

1

2

3

4

5

6

7

8

9

10

11

12

13cd /usr/local/Cellar/apache-flink/1.8.0

cd libexec/log

tail -f flink-zhengk-taskexecutor-0-zhengkaideMacBook-Pro.local.out

ipsum : 3

ipsum : 3

ipsum : 3

bye : 1

bye : 1

ipsum : 3

ipsum : 3

bye : 1

bye : 1

bye : 1

停止flink集群

进入flink安装目录

1

cd /usr/local/Cellar/apache-flink/1.8.0

运行停止脚本

1

2

3./libexec/bin/stop-cluster.sh

Stopping taskexecutor daemon (pid: 38310) on host zhengkaideMacBook-Pro.local.

Stopping standalonesession daemon (pid: 37893) on host zhengkaideMacBook-Pro.local.

扩展

官方教程如下:

https://ci.apache.org/projects/flink/flink-docs-release-1.9/getting-started/tutorials/local_setup.html